Ease of mind

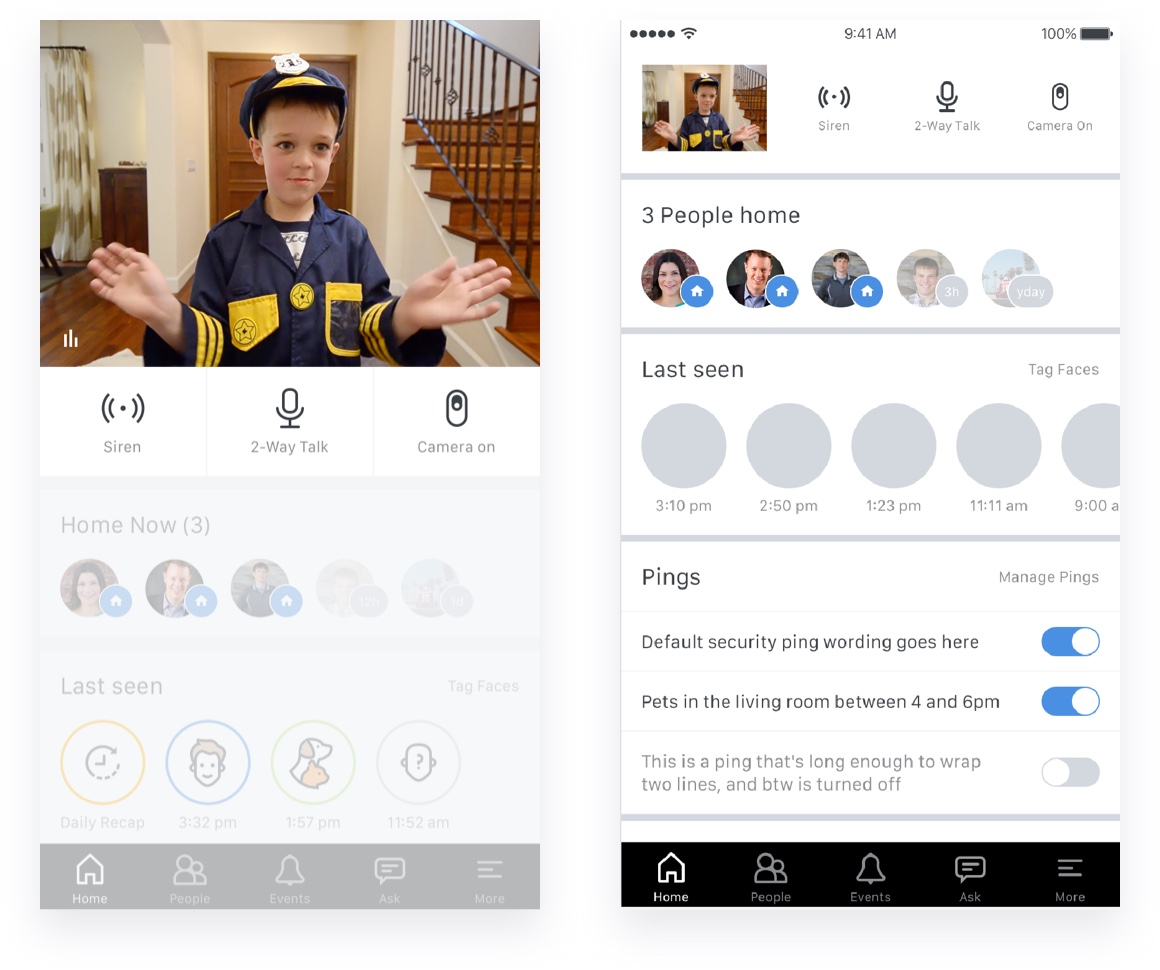

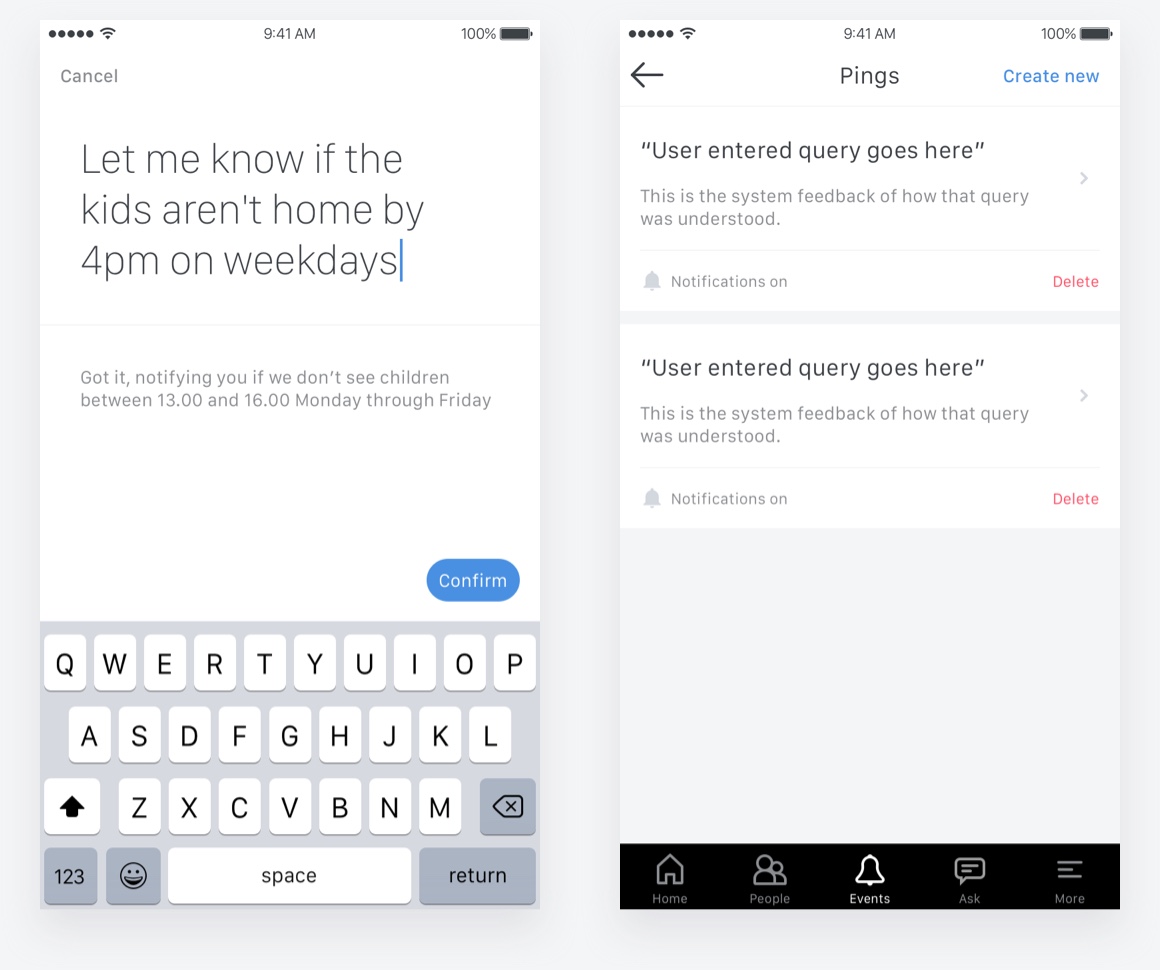

Through our studies we found that users perceived the livestream to be the most important feature. In practice, however, opening the app usually shows no activity on the stream unless the user was brought there by an external trigger. Because of its perceived importance, the stream was kept front and center in the UI, but we ran several experiments on shrinking the stream's screen real estate when users interact with other elements on the screen.

of respondents purchased primarily for home security reasons. A close second was general peace of mind.

considered the livestream the most important feature for achieving that goal.